Imagine a world where machines can learn from their experiences, like humans. This isn’t a sci-fi fantasy but the reality of AI/ML, where data annotation plays the starring role. Think of data annotation as the rigorous training regimen that turns raw data into seasoned athletes, ready to compete in the AI arena. The meticulous process of tagging data with labels teaches AI models to understand our complex world.

From pictures on your phone to posts on social media, unstructured data is everywhere. Data annotation is the magic wand that organizes this chaos, giving AI the ability to recognize patterns and make smart decisions.

For example, in healthcare, it helps create AI systems that can spot diseases from medical images with astonishing accuracy. In the realm of self-driving cars, it’s the annotated data that teaches vehicles to navigate bustling streets safely.

- Autonomous Vehicles: Companies like Tesla annotate road images to train their self-driving algorithms, identifying pedestrians, vehicles, and traffic signals to navigate safely.

- Healthcare Imaging: In medical diagnostics, AI models trained with annotated images can detect diseases from MRI scans, improving patient outcomes.

Data annotation is not just about making sense of data; it’s about crafting the intelligence that powers AI innovations. As we journey through this article, we’ll uncover the diverse types of data annotation, tackle the challenges it faces, share best practices for quality, and peek into the future of this fascinating field. So get ready to explore the world of data annotation, where every label is a step towards smarter AI solutions.

The Crucial Role of Data Annotation in AI/ML

Data annotation is the unsung hero in the AI/ML saga, the linchpin that holds the potential of intelligent systems together. It’s all about teaching machines to learn from data, shaping the future of technology with every piece of annotated information. But why is it so vital?

- Training AI Models: Just like students need textbooks, AI models need annotated data to learn and understand patterns. Without it, AI is like a ship without a compass, directionless in the vast sea of digital information.

- Enhancing Accuracy: The precision of AI predictions hinges on the quality of data annotation. It’s a simple equation: better annotation equals smarter AI, leading to technologies that can revolutionize industries by making more informed decisions.

- Driving Innovation: From healthcare diagnostics to autonomous vehicles, high-quality data annotation fuels advancements that can change the world. It’s the backbone of AI research and development, enabling machines to tackle complex tasks with human-like intuition.

- Overcoming Data Diversity: In our global village, the variety of data is staggering. Data annotation helps in managing this diversity, ensuring AI systems can understand and act on data from different sources and contexts.

Through examples like the precise labeling of medical images for disease detection or the detailed annotation of street scenes for self-driving cars, we see the profound impact of data annotation. It’s not just a technical task; it’s a foundation for future innovations, making the dream of intelligent, learning machines a reality.

The Spectrum of Data Annotation in AI/ML

Data annotation is not one-size-fits-all; it’s a kaleidoscope of techniques tailored to the unique needs of AI/ML models across various domains. Here’s a glimpse into this vibrant world:

- Image Annotation: The cornerstone of computer vision, image annotation involves labeling elements in pictures to help AI recognize and interpret visual information. Whether it’s identifying pedestrians for autonomous cars or detecting anomalies in medical scans, image annotation provides the eyes for AI to see and understand the world.

- Text Annotation: This involves labeling parts of the text to train models in understanding language nuances. From sentiment analysis in social media monitoring to chatbots that manage customer service, text annotation is the backbone of natural language processing (NLP) applications.

- Audio Annotation: Here, sounds are labeled to assist AI in recognizing voice commands, music genres, or environmental noises. It’s crucial for developing interactive AI assistants and enhancing user experiences with sound-based interfaces.

- Video Annotation: Combining elements of image and audio annotation, video annotation tracks and labels movements and actions over time, essential for surveillance AI, sports analytics, and more.

These annotation types are the building blocks of AI systems, enabling them to learn from and interact with the world in complex and meaningful ways.
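To make these annotation types concrete, here is a minimal sketch of how labeled records might be represented in code. The class and field names (`BoundingBox`, `TextSpan`, `AudioSegment`) are illustrative assumptions, not the schema of any particular annotation tool.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Image annotation: a labeled rectangle in pixel coordinates."""
    label: str
    x: int
    y: int
    width: int
    height: int

    def area(self) -> int:
        return self.width * self.height


@dataclass
class TextSpan:
    """Text annotation: a labeled character range within a sentence."""
    label: str
    start: int  # inclusive character offset
    end: int    # exclusive character offset

    def text(self, source: str) -> str:
        return source[self.start:self.end]


@dataclass
class AudioSegment:
    """Audio annotation: a labeled time interval, in seconds."""
    label: str
    start_s: float
    end_s: float


# Hypothetical examples of each record type.
pedestrian = BoundingBox(label="pedestrian", x=40, y=60, width=30, height=80)
sentence = "The delivery was late but the support team was great."
span = TextSpan(label="positive", start=30, end=52)
command = AudioSegment(label="voice_command", start_s=1.2, end_s=2.9)

print(pedestrian.area())    # 2400
print(span.text(sentence))  # support team was great
```

Under the same assumptions, a video annotation could be modeled as a list of `BoundingBox` records per frame, so that an object is tracked over time.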

Transforming Industries: The Impact of Data Annotation in AI/ML Applications

In the world of machine learning, data annotation is pivotal, catalyzing innovations across various industries. Its influence significantly enhances the precision, efficiency, and creative progress of sector-specific applications.

- Healthcare Breakthroughs: Data annotation refines AI in medical diagnostics, improving the accuracy of image analyses and predictive patient care.

- Retail Revolution: It personalizes shopping experiences by analyzing consumer behavior, enhancing inventory management, and forecasting trends.

- Automotive Safety: Drives advancements in autonomous vehicle technology, increasing recognition capabilities and ensuring safer roads.

- Financial Foresight: Bolsters risk assessment and fraud detection in financial services, offering customized solutions.

- Agricultural Innovation: Powers precision farming, optimizing crop monitoring, disease detection, and resource management for sustainable growth.

Navigating the Challenges of Data Annotation

Ensuring consistently high-quality annotations across large datasets is a significant quality-control challenge: poor-quality labels lead to inaccurate AI models, so robust quality control mechanisms are essential. Scalability becomes a critical concern as AI/ML projects expand, because the volume of data needing annotation grows rapidly and organizations must balance speed against accuracy. Annotator expertise also plays a crucial role, particularly in specialized fields like medicine or linguistics, where expert annotators are necessary to provide accurate and reliable data. Finally, with regulations such as GDPR in force, protecting data privacy and security, especially for sensitive information, is imperative. Addressing these challenges demands a strategic approach that incorporates the right tools, processes, and skilled professionals to ensure the success of AI/ML projects.
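One common way to put a number on annotation quality is to have two annotators label the same items and measure how often they agree beyond chance. The sketch below computes Cohen's kappa in plain Python. The label lists are made-up data, and this is only one of several possible agreement measures.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Fraction of items on which the annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    # Agreement expected if each annotator labeled at random
    # according to their own label frequencies.
    expected = sum(
        (counts_a[label] / n) * (counts_b[label] / n)
        for label in set(counts_a) | set(counts_b)
    )
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on the same eight images.
annotator_1 = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "dog"]
annotator_2 = ["cat", "cat", "dog", "cat", "cat", "dog", "dog", "dog"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 2))  # 0.5
```

A kappa near 1 suggests the labeling guidelines are being applied consistently, while a value near 0 means agreement is no better than chance and the guidelines or training probably need attention.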

Future Outlook

The future of data annotation in AI/ML is bursting with exciting possibilities as the field continues to evolve alongside advancements in AI and ML technologies. We’re witnessing some fascinating trends that are set to redefine how we approach data annotation. Picture this: automation and AI-assisted annotation are revolutionizing the scene, slashing both time and costs while ramping up scalability. And let’s not forget about the next-gen tools and platforms in the works, promising greater accuracy, efficiency, and user-friendliness. But wait, there’s more! We’re also diving into the realm of multimodal data integration, where text, images, and audio converge to create data sets that are richer and more robust than ever before. And let’s not overlook the growing importance of ethical and responsible annotation practices, ensuring fairness, impartiality, and privacy every step of the way. These trends aren’t just shaping the future of data annotation; they’re fuelling a whole new era of possibilities for AI/ML technologies. Get ready to be amazed!
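AI-assisted annotation often means that a model proposes labels and humans review only the uncertain ones. The following sketch shows that routing step under simple assumptions: the prediction tuples and the 0.9 confidence threshold are hypothetical, and a real pipeline would tune the threshold against audited samples.

```python
def route_prelabels(predictions, threshold=0.9):
    """Split model pre-labels into auto-accepted items and items for human review."""
    auto_accepted, needs_review = [], []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            auto_accepted.append((item_id, label))
        else:
            needs_review.append((item_id, label))
    return auto_accepted, needs_review


# Hypothetical model output: (item id, proposed label, confidence).
predictions = [
    ("img_001", "pedestrian", 0.97),
    ("img_002", "cyclist", 0.62),
    ("img_003", "car", 0.91),
]
auto, review = route_prelabels(predictions)
print(len(auto), "auto-accepted;", len(review), "sent to annotators")
```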

In an era where technology redefines boundaries, leaders are constantly seeking that next breakthrough to vault their businesses ahead of the curve. Enter AI-driven personalization, not just a buzzword but a transformative strategy that’s reshaping how businesses interact with their customers, streamline their operations, and outmanoeuvre the competition. This exploration is more than just an overview; it’s your guide to integrating AI into your business strategy, making every customer interaction not just a transaction, but a personalized journey.

Why AI and Personalization?

Imagine a world where your business not only anticipates the needs of your customers but also delivers personalized solutions before they even articulate them. This isn’t the plot of a sci-fi novel; it’s the reality that AI personalization makes possible today. It’s about turning data into actionable insights, creating a unique customer journey that boosts engagement, loyalty, and ultimately your top and bottom lines alike. From customized marketing campaigns to personalized product recommendations, AI is the linchpin in crafting experiences that resonate on a personal level, especially for Gen Z, a generation accustomed to tailored digital experiences.

The Transformational Power of AI in Business

- Customer Experience Reinvented: AI enables a nuanced understanding of customer behaviors and preferences, allowing businesses to tailor experiences that are not just satisfying but delightfully surprising.

- Operational Efficiency Unleashed: Beyond customer-facing features, AI drives internal efficiencies, optimizing everything from supply chain logistics to customer service operations, ensuring that resources are allocated where they generate the most value.

- Data-Driven Decisions: With AI, data isn’t just collected; it’s deciphered into strategic insights, empowering leaders to make informed decisions that drive growth and innovation.

How to Implement AI-Powered Personalization

Implementing an AI-driven personalization approach requires a strategic and thoughtful process. Here are key steps to establish a successful AI-based personalization framework:

- Establish clear goals: The initial step involves identifying the specific reasons behind adopting personalization. Businesses might aim to boost their revenue, enhance customer satisfaction, or minimize customer turnover. It’s crucial to have a clear understanding of these goals to steer the strategy’s direction and measure its success effectively.

- Prioritize data quality: The success of any AI-driven personalization initiative heavily depends on the quality and comprehensiveness of customer data. Organizations should focus on creating systems for gathering and maintaining accurate and relevant data, which will serve as the foundation for understanding customer behaviors and preferences.

- Continuous optimization: It’s essential to regularly evaluate and refine the personalization strategy. By leveraging customer feedback, businesses can make necessary adjustments, ensuring the strategy remains relevant and effective over time.

- Maintain transparency: Establishing trust with customers is fundamental, and this can be achieved by being open about how their data is collected and used for personalization. Clear privacy policies and explanations regarding the utilization of customer information for tailored experiences are vital.

- Ensure omnichannel personalization: To provide a seamless and customized customer experience, personalization should be consistent across all points of interaction with customers, including emails, social media, and physical store visits. Integrating personalization throughout these channels ensures a uniform and personalized customer journey.

Navigating the Implementation Journey

Implementing AI-driven personalization isn’t without its challenges. It requires a robust data infrastructure, a clear strategy aligned with business objectives, and a culture of innovation that embraces digital transformation. Yet, the journey from inception to implementation is filled with opportunities to redefine your industry, engage customers on a new level, and set a new standard for excellence in your operations.

Challenges and Opportunities

The adoption of AI-driven personalization faces key challenges, including ensuring data privacy and ethics, managing implementation costs, and maintaining transparency to build trust. Despite these hurdles, there are opportunities for innovation and customer relationship enhancement. Businesses that navigate these challenges with ethical AI practices and transparent data handling can differentiate their brand, foster customer loyalty, and achieve sustainable growth, effectively balancing innovation with ethical responsibility.

Leading Examples of AI-Powered Personalization in Action

In the realm of AI-driven personalization, several companies stand out for their innovative approaches to enhancing customer experiences and achieving remarkable business outcomes. Here are a few notable examples:

- Netflix’s Customized Viewing Experience: Netflix, a premier subscription-based streaming service, leverages machine learning algorithms to curate personalized TV show and movie recommendations for its subscribers. By analysing viewing histories and individual preferences, Netflix ensures that its content recommendations keep users engaged and subscribed. This strategy is incredibly effective, with personalized recommendations accounting for approximately 80% of the content streamed on the platform. Such tailored experiences have been instrumental in Netflix’s ability to maintain and grow its subscriber base over time.

- Salesforce Einstein for CRM Personalization: Salesforce Einstein integrates AI into its customer relationship management (CRM) platform, offering personalized customer insights that businesses can use to tailor their sales, marketing, and service efforts. By analysing customer data, Salesforce Einstein provides predictive scoring, lead scoring, and automated recommendations, helping B2B companies enhance their customer engagement and streamline their operations, much like Netflix’s approach to content personalization.

- LinkedIn Sales Navigator for Personalized B2B Sales: LinkedIn Sales Navigator leverages AI to offer personalized insights and recommendations to sales professionals, helping them find and engage with potential B2B clients more effectively. By analysing data from LinkedIn’s vast network, Sales Navigator can suggest leads and accounts based on the sales team’s preferences, search history, and past success, much like Spotify uses listening habits to personalize playlists for its users.
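The recommendation examples above share one basic idea: suggest items that similar users liked. The sketch below shows a small user-based collaborative filter in plain Python. The ratings are invented, and this is a textbook technique used for illustration, not the actual algorithm behind Netflix, Salesforce Einstein, or LinkedIn Sales Navigator.

```python
from math import sqrt

# Hypothetical viewing data: user -> {title: rating from 1 to 5}.
ratings = {
    "ana":   {"Drama A": 5, "Drama B": 4, "Comedy C": 1},
    "ben":   {"Drama A": 4, "Drama B": 5},
    "chloe": {"Comedy C": 5, "Comedy D": 4, "Drama A": 1},
    "dev":   {"Drama A": 5},
}


def cosine(u, v):
    """Cosine similarity between two sparse rating dicts."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[t] * v[t] for t in shared)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v)


def recommend(user, ratings, top_n=1):
    """Rank unseen titles by the similarity-weighted ratings of other users."""
    seen = ratings[user]
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine(seen, other_ratings)
        if sim <= 0:
            continue
        for title, rating in other_ratings.items():
            if title in seen:
                continue
            scores[title] = scores.get(title, 0.0) + sim * rating
            weights[title] = weights.get(title, 0.0) + sim
    ranked = sorted(scores, key=lambda t: scores[t] / weights[t], reverse=True)
    return ranked[:top_n]


print(recommend("dev", ratings))  # ['Drama B']
```

Here "dev" has only watched "Drama A", so the viewers whose tastes overlap most on that title drive the suggestion.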

Following in the footsteps of these industry leaders in leveraging AI for enhanced customer experiences, NRich by Navikenz emerges as another significant example. Integrating seamlessly into the landscape of AI-driven personalization for B2B marketing, it offers a nuanced approach to enhancing product content, aligning with the evolving needs of businesses seeking to personalize their customer interactions.

Conclusion

In the evolving landscape of digital business, the focus shifts from merely acquiring AI technology to securing meaningful outcomes. This shift emphasizes the importance of selecting AI partners who align with organizational goals and deliver real value, beyond just technological advancements. AI-powered personalization stands at the forefront of this transformation, offering targeted solutions that resonate with consumer desires in a saturated market. Emphasizing outcomes over technology enables businesses to offer personalized experiences that drive customer satisfaction and long-term growth. As we embrace this new era, let’s think about investing in AI to achieve outcomes that enhance customer experiences and propel business growth.

Introduction: The Evolution of Search in the Digital Age

How often have you found yourself lost in the maze of online search results, wishing for a more intuitive way to find exactly what you need? In our rapidly evolving digital age, this quest for more refined human-computer interactions has led to ground-breaking advancements in search technologies. At the forefront is Neural Entity-Based Contextual Searches (NEBCS), a cutting-edge technology set to revolutionize our interactions with digital platforms. Let’s explore the inner workings of NEBCS, shedding light on how it transforms search experiences and reshapes our digital interactions.

Understanding the Basics: The What and How of NEBCS

At its core, NEBCS represents a significant leap from traditional search methods. Unlike the older ‘keyword-centric’ approach, NEBCS leverages advanced neural network technologies to understand and interpret the context and entities within a user’s query. Imagine asking a wise sage instead of a library index – that’s the kind of intuitive understanding we’re talking about.

The Evolution and Principles of NEBCS

Neural Entity-Based Contextual Searches are grounded in the field of Named Entity Recognition (NER), a critical task in Natural Language Processing (NLP) that involves identifying important objects, such as persons, organizations, and locations, from text. The ‘context’ is the framework surrounding these entities, providing additional meaning. For instance, when you search for “Apple,” are you referring to the fruit or the tech giant? NEBCS understands the difference based on context clues in your query. Furthermore, recent advancements in NLP and machine learning, particularly neural networks, have significantly enhanced the capabilities of NER systems, making them essential for many downstream NLP applications like AI assistants and search engines.
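The "Apple" example can be illustrated with a deliberately simple stand-in for what a neural model learns from data. In this sketch, each sense of an ambiguous entity has a hand-written list of cue words, and the sense with the most cues in the query wins. The `SENSES` table and the `disambiguate` function are hypothetical; a real system would learn these associations rather than list them.

```python
# Hypothetical cue words for each sense of an ambiguous entity.
SENSES = {
    "apple": {
        "fruit": {"pie", "juice", "orchard", "recipe", "eat", "tree"},
        "company": {"iphone", "macbook", "stock", "ceo", "store", "ios"},
    }
}


def disambiguate(query, entity, senses=SENSES):
    """Pick the sense whose cue words overlap most with the query's context."""
    tokens = set(query.lower().replace("?", "").split())
    context = tokens - {entity}
    best_sense, best_overlap = None, 0
    for sense, cues in senses[entity].items():
        overlap = len(context & cues)
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense  # None when the context gives no clue


print(disambiguate("apple pie recipe", "apple"))   # fruit
print(disambiguate("Apple stock price", "apple"))  # company
```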

Advancements in NER Techniques

Recent research indicates that incorporating document-level contexts can markedly improve the performance of NER models. Techniques like feature engineering, which includes syntactical, morphological, and contextual features, play a critical role in NER system efficacy. Additionally, the utilization of pre-trained word embeddings and character-level embeddings has become a standard practice, providing more nuanced and accurate entity recognition.
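As an illustration of feature engineering for NER, the sketch below builds a small dictionary of morphological and contextual features for one token. The feature names are assumptions chosen for readability. Syntactic features such as part-of-speech tags are left out because they would require a separate tagger.

```python
def token_features(tokens, i):
    """Hand-crafted features for the token at position i."""
    word = tokens[i]
    return {
        # Morphological features: the shape of the word itself.
        "lower": word.lower(),
        "is_capitalized": word[:1].isupper(),
        "is_all_caps": word.isupper(),
        "has_digit": any(ch.isdigit() for ch in word),
        "suffix3": word[-3:].lower(),
        # Contextual features: the neighbouring tokens.
        "prev": tokens[i - 1].lower() if i > 0 else "<START>",
        "next": tokens[i + 1].lower() if i < len(tokens) - 1 else "<END>",
    }


tokens = ["Visit", "Paris", "in", "2024"]
paris = token_features(tokens, 1)
year = token_features(tokens, 3)
print(paris)
```

Feature dictionaries like these are typically fed to a sequence classifier. Embedding-based models replace most of this manual work with learned representations.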

From Traditional to Contextual Searches

Traditional search technologies primarily relied on keyword matching, but contextual search focuses on understanding the user-generated query’s context, including the original intent, to provide more relevant results. This evolution is propelled by advancements in computational power, computer vision, and natural language processing/generation.

The Neural Magic: Understanding the Role of AI and Machine Learning

The heart of NEBCS lies in its use of Artificial Intelligence (AI) and Machine Learning (ML). These technologies enable the system to learn from vast amounts of data, recognize patterns, and make intelligent guesses about what you’re searching for. It’s like having a detective piecing together clues to solve the mystery of your query.

The User Experience: How NEBCS Changes Our Search Behavior

For users, NEBCS is a game changer. It offers a more intuitive, efficient, and accurate search experience. No more sifting through pages of irrelevant results. NEBCS understands the intent behind your query, presenting you with the most pertinent information. It’s like having a personal librarian who knows exactly what you need, even when you’re not sure yourself.

Real-World Applications: NEBCS in Action

Imagine you’re planning a trip to Paris and search for “best coffee shops near the Louvre.” Instead of just matching keywords, NEBCS recognizes “Louvre” and “coffee shops” as entities, infers from the Louvre that the search concerns Paris, and understands you’re looking for recommendations nearby, offering tailored results. The potential applications in e-commerce, research, and personalized services are wide-ranging. This capability paves the way for the integration of NEBCS into various sectors, particularly in enhancing decision-making and operational efficiency.
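A toy version of this query analysis can be written with a lookup table of known entities. The `GAZETTEER` contents, entity type names, and `parse_query` function are all hypothetical, and a neural system would recognize entities it has never seen listed, which this sketch cannot.

```python
# Hypothetical gazetteer: surface phrase -> (entity type, extra attributes).
GAZETTEER = {
    "louvre": ("LANDMARK", {"city": "Paris"}),
    "coffee shops": ("PLACE_CATEGORY", {}),
    "eiffel tower": ("LANDMARK", {"city": "Paris"}),
}
PROXIMITY_WORDS = {"near", "nearby", "around"}


def parse_query(query):
    """Extract known entities and a proximity intent from a search query."""
    text = query.lower()
    entities = [
        {"phrase": phrase, "type": etype, **attrs}
        for phrase, (etype, attrs) in GAZETTEER.items()
        if phrase in text
    ]
    wants_nearby = any(word in text.split() for word in PROXIMITY_WORDS)
    return {"entities": entities, "wants_nearby": wants_nearby}


result = parse_query("best coffee shops near the Louvre")
for entity in result["entities"]:
    print(entity)
print("nearby search:", result["wants_nearby"])
```

The landmark entry carries its city, which is how the sketch connects a query that never mentions Paris to Paris-specific results.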

Enhancing Decision-Making and Operational Efficiency

Contextual searches can significantly improve various business processes. For instance, they can assist in quickly locating relevant information from large databases, thereby reducing operational overhead and expediting decision-making. This technology’s adaptability to different datasets and entity types makes it applicable across various industries.

Real-World Implementations

Enterprises have successfully implemented NEBCS in diverse scenarios, such as extracting relevant geological information from unstructured images and documents. This approach leverages deep learning and NLP techniques to parse data in real time, improving efficiency and generating actionable insights.

The Challenges and Future Path

While NEBCS offers numerous benefits, challenges remain in understanding the mechanics behind deep learning algorithms and setting up scalable data infrastructures. Additionally, there is a growing need to address the ‘black box’ nature of AI systems, fostering greater trust in AI-driven processes.

Overcoming Limitations

Despite the popularity of distributional vectors used in NEBCS, limitations exist in their ability to predict distinct conceptual features. Addressing these challenges through research and development is crucial for the continuous improvement of NEBCS.

Conclusion: Embracing the NEBCS Revolution

As we stand on the brink of this new era in search technology, it’s clear that Neural Entity-Based Contextual Searches are not just a fleeting trend but a fundamental shift in how we access information. By understanding and embracing this technology, we open ourselves to a world of possibilities where the right information is just a query away. The future of search is here, and it’s more intuitive, efficient, and aligned with our needs than ever before.

Did you know?

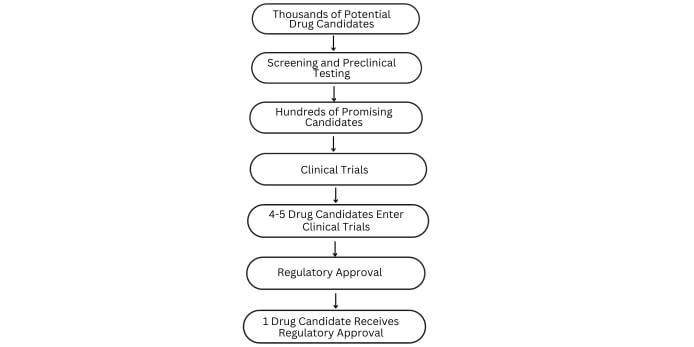

It can take up to 12 years to bring a new drug to market, from research and development to clinical trials and regulatory approval.

The cost of developing a new drug can vary widely, but estimates suggest that it can cost anywhere from $1 billion to $2.6 billion to bring a single drug to market, depending on factors such as the complexity of the disease being targeted and the length of clinical trials.

The success rate for drug candidates that enter clinical trials is typically low, with only about 10-15% of candidates ultimately receiving regulatory approval.

AI ML in drug discovery

The majority of drug candidates fail during preclinical testing, which is typically the first step in the drug development process. Only about 5% of drug candidates that enter preclinical testing make it to clinical trials.
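Combining the two success rates quoted above gives a rough sense of overall attrition. The calculation below assumes the stage rates can simply be multiplied, which is a simplification. The percentages are the ones stated in this article.

```python
preclinical_to_clinical = 0.05       # about 5% reach clinical trials
clinical_to_approval = (0.10, 0.15)  # about 10-15% of those are approved

# Overall chance that a candidate entering preclinical testing is approved.
overall = tuple(preclinical_to_clinical * rate for rate in clinical_to_approval)

# Roughly how many preclinical candidates are needed per approved drug.
candidates_per_approval = tuple(round(1 / rate) for rate in overall)

print(f"overall success: {overall[0]:.2%} to {overall[1]:.2%}")
print(f"candidates per approval: {candidates_per_approval[1]} to {candidates_per_approval[0]}")
```

Under these assumptions, fewer than 1 in 100 candidates that enter preclinical testing reach the market, which is the inefficiency ML methods aim to reduce.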

Drug Discovery Lifecycle


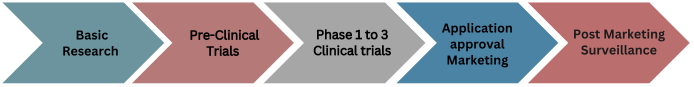

Basic Research: The drug discovery process begins with basic research, which involves identifying a biological target that is implicated in a disease. Researchers then screen large numbers of chemical compounds to find ones that interact with the target.

Preclinical Trials: Once a promising drug candidate has been identified, it must undergo preclinical (non-clinical) testing to evaluate its safety and efficacy in animals. This stage includes testing for toxicity, pharmacokinetics, and pharmacodynamics.

Phase 1 to 3 Clinical Trials:

- Phase 1 Clinical Trials:

Phase 1 trials are the first step in evaluating the safety and tolerability of a drug candidate in humans. These trials typically involve a small group of healthy volunteers, usually ranging from 20 to 100 participants. The primary focus is to assess the drug’s pharmacokinetics (how the drug is absorbed, distributed, metabolized, and excreted), pharmacodynamics (how the drug interacts with the body), and determine the safe dosage range.

- Phase 2 Clinical Trials:

Once a drug candidate successfully passes Phase 1 trials, it moves on to Phase 2 trials, which involve a larger number of patients. These trials aim to assess the drug’s efficacy and further evaluate its safety profile. Phase 2 trials can involve several hundred participants and are typically divided into two or more groups. Patients in these groups may receive different dosages or formulations of the drug, or they may be compared to a control group receiving a placebo or an existing standard treatment. The results obtained from Phase 2 trials help determine the optimal dosing regimen and provide initial evidence of the drug’s effectiveness.

- Phase 3 Clinical Trials:

Phase 3 trials are the final stage of clinical testing before seeking regulatory approval. They involve a larger patient population, often ranging from several hundred to several thousand participants, and are conducted across multiple clinical sites. Phase 3 trials aim to further confirm the drug’s effectiveness, monitor side effects, and collect additional safety data in a more diverse patient population. These trials are crucial in providing robust evidence of the drug’s benefits and risks, as well as determining the appropriate usage guidelines and potential adverse reactions.

Application, Approval, and Marketing: If a drug candidate successfully completes clinical trials, the drug sponsor can submit a New Drug Application (NDA) or Biologics License Application (BLA) to the regulatory agency for approval. If the application is approved, the drug can be marketed to patients.

Post-Marketing Surveillance: Once a drug is on the market, post-marketing surveillance is conducted to monitor its safety and efficacy in real-world settings. This includes ongoing pharmacovigilance activities, such as monitoring for adverse events and drug interactions, and conducting post-marketing studies to evaluate the long-term safety and efficacy of the drug.

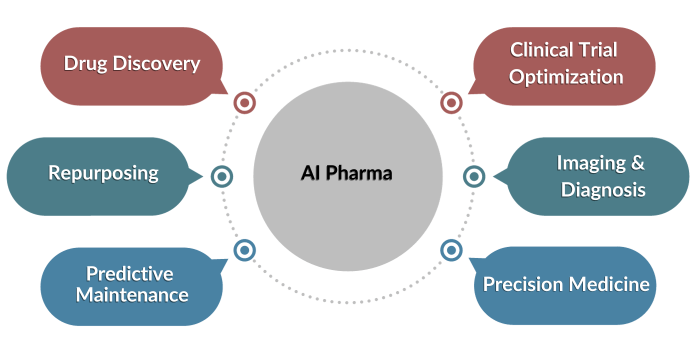

Role of Machine Learning & AI in the Pharma Drug Lifecycle:

ML algorithms can analyze large and complex datasets, identify patterns and trends, and make predictions or decisions based on this analysis. ML is a rapidly evolving field, with new techniques and algorithms being developed all the time, and it has the potential to transform the way we live and work.

How does ML solve the basic drug discovery problems?

The role of machine learning (ML) in drug discovery has become increasingly important in recent years. ML can be applied to various stages of the drug discovery process, from target identification to clinical trials, to improve the efficiency and success rate of drug development.

Stages of drug discovery process:

| Phase | Goal |
|---|---|
| Target Identification | Find all targets and eliminate wrong targets |
| Lead discovery and optimization | Identify compounds and promising molecules |
| Preclinical Development Stage | Eliminate molecules and analyze the safety of potential drug |

In the target identification stage, ML algorithms can analyze large-scale genomics and proteomics data to identify potential drug targets. This can help researchers identify novel targets that are associated with specific diseases and develop drugs that target these specific pathways.

In the lead discovery stage, ML can be used to screen large chemical libraries to identify compounds with potential therapeutic properties. ML algorithms can analyze the chemical structures and properties of known drugs and identify similar compounds that may have therapeutic potential. This can help accelerate the discovery of new drug candidates and reduce the time and cost of drug development.
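The idea of finding compounds similar to known drugs can be sketched with Tanimoto similarity over molecular fingerprints. Here each fingerprint is just a set of feature indices invented for the example. In practice the fingerprints would come from a cheminformatics toolkit such as RDKit, and the 0.6 threshold is an assumption.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto (Jaccard) similarity between two sets of 'on' fingerprint bits."""
    if not fp_a and not fp_b:
        return 0.0
    return len(fp_a & fp_b) / len(fp_a | fp_b)


# Hypothetical fingerprints: each set holds indices of structural features present.
known_drug = {1, 4, 7, 9, 12}
library = {
    "compound_A": {1, 4, 7, 9, 13},
    "compound_B": {2, 3, 5},
    "compound_C": {1, 4, 7, 9, 12, 15},
}


def screen(query_fp, library, threshold=0.6):
    """Return library compounds at or above the similarity threshold, best first."""
    hits = [(name, tanimoto(query_fp, fp)) for name, fp in library.items()]
    hits = [(name, score) for name, score in hits if score >= threshold]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


for name, score in screen(known_drug, library):
    print(f"{name}: {score:.2f}")
```

Similarity search of this kind is a simple baseline. ML models extend it by learning which structural features actually matter for activity.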

In the lead optimization stage, ML can be used to predict the properties of potential drug candidates, such as their pharmacokinetics and toxicity, based on their chemical structures. This can help researchers prioritize and optimize the most promising compounds for further development, leading to more efficient drug development.

In the preclinical development stage, ML can be used to analyze the results of animal studies and predict the safety and efficacy of potential drug candidates in humans. This can help identify potential safety issues early in the development process and reduce the risk of adverse effects in human trials.

Advancements in Clinical Trials and Drug Safety with Machine Learning (ML)

Applications of ML in Clinical Trials:

ML algorithms can be used to optimize the design and execution of clinical trials. They can analyze patient data and identify suitable participants based on specific criteria, leading to more efficient and targeted recruitment. ML can also assist in patient stratification, helping researchers identify subpopulations that may respond better to the drug being tested. Furthermore, ML algorithms can analyze clinical trial data to predict patient outcomes, assess treatment response, and detect potential adverse effects.

ML in Drug Safety Assessment:

ML techniques can aid in the analysis of large datasets to identify patterns and detect safety signals associated with drug usage. By analyzing real-world data, including electronic health records and post-marketing surveillance data, ML algorithms can help identify potential adverse reactions and drug-drug interactions. This information can contribute to improving drug safety monitoring and post-market surveillance efforts.
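A simple, widely used starting point for spotting safety signals in adverse-event reports is the proportional reporting ratio (PRR). It is a classical disproportionality statistic rather than an ML model, and ML methods build on counts like these. The report counts and the flagging threshold of 2.0 below are assumptions for illustration.

```python
def proportional_reporting_ratio(a, b, c, d):
    """PRR from a 2x2 table of spontaneous adverse-event reports.

    a: reports for the drug of interest that mention the event
    b: reports for the drug of interest that do not mention the event
    c: reports for all other drugs that mention the event
    d: reports for all other drugs that do not mention the event
    """
    if a + b == 0 or c + d == 0 or c == 0:
        raise ValueError("table has an empty row or no comparator events")
    rate_drug = a / (a + b)
    rate_others = c / (c + d)
    return rate_drug / rate_others


# Hypothetical counts from a reporting database.
prr = proportional_reporting_ratio(a=20, b=980, c=100, d=19900)
flagged = prr >= 2.0  # assumed screening threshold
print(round(prr, 2), flagged)
```

A flagged pair is only a hypothesis for further review, since reporting databases are subject to biases that a ratio alone cannot correct.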

Connections with Computer-Aided Drug Design (CADD) and Structure-Based Drug Design:

ML is closely related to CADD and structure-based drug design methodologies. CADD utilizes ML algorithms to analyze chemical structures, predict compound properties, and assess their potential as drug candidates. ML can also assist in virtual screening, where large chemical libraries are screened computationally to identify molecules with desired properties. Furthermore, ML can be employed to model protein structures and predict protein-ligand interactions, aiding in the design of new drug candidates.

How is AI/ML currently being applied in the pharmaceutical industry?


Drug Discovery:

AI/ML algorithms can identify potential drug targets and predict drug efficacy, toxicity, and side effects, which can reduce the time and cost of drug discovery. ML algorithms can analyze vast amounts of data, including gene expression, molecular structure, and biological pathway information, to generate new hypotheses about drug targets and drug interactions. Furthermore, AI/ML can predict which drug candidates have the best chances of success, increasing the likelihood of approval by regulatory agencies.

Clinical Trial Optimization:

AI/ML can help optimize clinical trials by identifying suitable patient populations, predicting treatment response, and identifying potential adverse events. By analyzing patient data, including clinical data, genomic data, and real-world data, AI/ML can identify subpopulations that are more likely to benefit from the drug and optimize the dosing and administration of the drug. Moreover, AI/ML can identify potential adverse events that may have been overlooked in traditional clinical trial designs.

Precision Medicine:

AI/ML can be used to analyze patient data, such as genomic, proteomic, and clinical data, to identify personalized treatment options based on individual patient characteristics. AI/ML can help identify genetic variations that may affect the efficacy or toxicity of a drug, leading to more targeted and personalized treatments. For instance, ML algorithms can analyze patient data and predict which patients are more likely to benefit from immunotherapy treatment for cancer.

Real-world Data Analysis:

AI/ML can be used to analyze large amounts of real-world data, such as electronic health records and claims data, to identify patterns and insights that can inform drug development and patient care. For example, AI/ML can help identify the causes of adverse events, such as drug-drug interactions, leading to better post-market surveillance and drug safety.

Drug Repurposing:

AI/ML can be used to identify existing drugs that can be repurposed for new indications, which can help reduce the time and cost of drug development. ML algorithms can analyze large amounts of data, including molecular structure, clinical trial data, and real-world data, to identify drugs that have the potential to treat a specific disease.

Imaging and Diagnosis:

AI/ML can be used to analyze medical images, such as CT scans and MRI scans, to improve diagnosis accuracy and speed. AI/ML algorithms can analyze large amounts of medical images and detect subtle changes that may be missed by human radiologists. For instance, AI/ML can analyze medical images and identify early signs of Alzheimer’s disease or heart disease.

Predictive Maintenance:

AI/ML can be used to monitor equipment and predict when maintenance is needed, which can help reduce downtime and improve efficiency. ML algorithms can analyze data from sensors and predict when equipment is likely to fail, leading to more efficient maintenance and reduced downtime.
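
As a rough illustration of the idea, the sketch below flags sensor readings that deviate sharply from their recent history. It is a simple rolling z-score check standing in for a trained failure-prediction model; the function name `flag_anomalies`, the window size, the threshold, and the vibration values are all assumptions made for this example.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that sit more than `threshold` standard
    deviations away from the mean of the preceding `window` readings."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: no spread to compare against, so skip.
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical vibration readings from one machine (arbitrary units).
vibration = [0.50, 0.52, 0.49, 0.51, 0.50, 0.51, 0.50, 0.95, 0.52, 0.50]
alerts = flag_anomalies(vibration)
print(alerts)
```

In practice a flagged index would feed a maintenance alert rather than a print statement, and a production system would likely learn from labeled failure history instead of relying on a fixed threshold.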

Some examples of AI and ML tools used in the pharmaceutical industry

| Tools | Details | Website URL |
|---|---|---|
| DeepChem | MLP model that uses a Python-based AI system to find a suitable candidate in drug discovery | https://github.com/deepchem/deepchem |
| DeepTox | Software that predicts the toxicity of a total of 12,000 drugs | www.bioinf.jku.at/research/DeepTox |
| DeepNeuralNetQSAR | Python-based system driven by computational tools that aid detection of the molecular activity of compounds | |
| Organic | A molecular generation tool that helps to create molecules with desired properties | https://github.com/aspuru-guzik-group/ORGANI |
| PotentialNet | Uses NNs to predict binding affinity of ligands | https://pubs.acs.org/doi/full/10.1021/acscentsci.8b00507 |
| Hit Dexter | ML technique to predict molecules that might respond to biochemical assays | http://hitdexter2.zbh.uni-hamburg.de |
| DeltaVina | A scoring function for rescoring drug–ligand binding affinity | https://github.com/chengwang88/deltavina |
| Neural graph fingerprint | Helps to predict properties of novel molecules | https://github.com/HIPS/neural-fingerprint |
| AlphaFold | Predicts 3D structures of proteins | https://deepmind.com/blog/alphafold |
| Chemputer | Helps to report procedure for chemical synthesis in standardized format | https://zenodo.org/record/1481731 |

These examples demonstrate the application of AI and ML in different stages of the pharmaceutical drug lifecycle, from drug discovery to safety assessment and protein structure prediction.

Use cases of AI/ML Technology in Pharmaceutical Industry

- Protein Folding: AI and ML algorithms can predict the way proteins will fold, which is critical in drug discovery. For example, Google’s DeepMind used AI to solve a 50-year-old problem in protein folding, which could lead to the development of new drugs.

- Adverse Event Detection: AI and ML algorithms can analyze patient data and detect adverse events associated with medications. For example, IBM Watson Health uses AI to detect adverse events in real-time, which can improve patient safety and help pharmaceutical companies respond quickly to potential issues.

- Supply Chain Optimization: AI and ML algorithms can optimize supply chain processes, such as demand forecasting and inventory management. For example, Merck uses AI to predict demand for their products and optimize their supply chain operations.

- Quality Control: AI and ML algorithms can be used to monitor manufacturing processes and detect defects or quality issues in real-time. For example, Novartis uses AI to monitor their manufacturing processes and detect potential quality issues before they become major problems.

- Drug Pricing: AI and ML algorithms can analyze market data and predict the optimal price for drugs. For example, Sanofi uses AI to optimize drug pricing based on factors such as market demand, patient access, and the cost of production.

AI/ML has become an essential tool in the pharmaceutical industry and R&D. The use of AI/ML can accelerate drug discovery, optimize clinical trials, personalize treatments, and improve patient outcomes. Moreover, AI/ML can analyze large amounts of data and identify patterns and insights that may have been missed by traditional methods, leading to better drug development and patient care. The future of AI/ML in pharma and R&D is promising, and it is expected to revolutionize the industry and improve patient outcomes.

Welcome to the intersection of advanced technology and traditional agriculture! In this blog, we will explore the integration of artificial intelligence (AI) with agricultural practices, uncovering its remarkable potential and practical applications. The blog will elucidate how AI is reshaping farming, optimizing crop production, and charting a path for the future of sustainable agriculture. It is worth noting that global spending on smart, connected agricultural technology is forecast to triple by 2025, resulting in revenue of $15.3 billion. According to a report by PwC, the IoT-enabled Agricultural (IoTAg) monitoring segment is expected to reach a market value of $4.5 billion by 2025. As we embark on this journey, brace yourself for the extraordinary ways in which AI is metamorphosing the agricultural landscape.

AI in Agriculture: A Closer Look

Personalized Training and Educational Content: Cultivating Agricultural Knowledge

AI-driven virtual agents serve as personalized instructors in regional languages, addressing farmers’ specific queries and educational requisites. These agents, equipped with extensive agricultural data derived from academic institutions and diverse sources, furnish tailored guidance to farmers. Whether it pertains to transitioning to new crops or adopting Good Agricultural Practices (GAP) for export compliance, these virtual agents offer a trove of knowledge. By harnessing AI’s extensive reservoir of information, farmers can enhance their competencies, make informed decisions, and embrace sustainable practices.

From Farm to Fork: AI-Enhanced Supply Chain Optimization

In the contemporary world, optimizing supply chains is paramount to delivering fresh and secure produce to the market. AI is reshaping the operational landscape of agricultural supply chains. By leveraging AI algorithms, farmers and distributors gain unparalleled visibility and control over their inventories, thereby reducing wastage and augmenting overall efficiency.

A case in point is the pioneering partnership between Walmart and IBM, resulting in a ground-breaking system that combines blockchain and AI algorithms to enable end-to-end traceability of food products. Consumers can now scan QR codes on product labels to access comprehensive information concerning the origin, journey, and quality of the food they procure. This innovation affords consumers enhanced transparency and augments trust in the supply chain.

Drone Technology and Aerial Imaging: Enhanced Crop Monitoring and Management

Drone technology has emerged as a transformative force in agriculture, revolutionizing crop management methodologies. AI-powered drones, equipped with high-resolution cameras and sensors, yield invaluable insights for soil analysis, weather monitoring, and field evaluation. By capturing aerial imagery, these drones facilitate precise monitoring of crop health, early detection of diseases, and identification of nutrient deficiencies. Moreover, they play an instrumental role in effective plantation management and pesticide application, thereby optimizing resource usage and reducing environmental impact. The amalgamation of drone technology and artificial intelligence empowers farmers with real-time data and actionable insights, fostering more intelligent and sustainable agricultural practices.

AI for Plant Identification and Disease Diagnosis

AI-driven solutions play a pivotal role in the management of crop diseases and pests. By harnessing machine learning algorithms and data analysis, farmers receive early warnings and recommendations to mitigate the impact of pests and diseases on their crops. Utilizing satellite imagery, historical data, and AI algorithms, these solutions identify and detect insect activity or disease outbreaks, enabling timely interventions. Early detection minimizes crop losses, ensures higher-quality yields, and reduces dependence on chemical pesticides, thereby promoting sustainable farming practices.

Commerce has been the incubation center for many things AI, from Amazon’s recommendations in 2003, to Uniqlo’s first magic mirror in 2012, to TikTok’s addictive product recommendations, to the generative images in use now.

We believe that AI has a role to play in all dimensions of commerce:

- Improving the experience for shoppers – with the right recommendations, great content, interactive conversational experiences, or mixed reality, for example

- Automating the value chain of eCommerce – by automating content creation with generative product content, metadata, or images, or by automating support processes using AI to augment robotic automation, for example

- Providing data-driven recommendations to organizations – by recommending how to respond to changing competition and changing shopper behavior in order to optimize inventory, price competitively, and promote effectively

- Trying out and building new revenue channels and sources – by trying out conversational commerce, for example

Mainstream content is all about B2C, and it is not always clear what it can do for B2B stores.

Here are 5 things B2B commerce providers can do with AI now

- Make your customers feel like VIPs with a personalized landing page. Personalized landing pages with relevant recommendations can help accelerate buying, improve conversion, and showcase your newer products. This improves monthly sales bookings, new product performance, and journey efficiency, and expands monthly recurring revenue. Personalization technologies, recommendation engines, and personalized search technologies are mature enough to implement a useful landing page today.

- Ease product content and classification with generative AI: Reduce the time needed to create a high-quality, persuasive product description with relevant metadata and classification that make the product easier to find. Improve discovery by expanding tags and categories automatically. While earlier LLMs needed a large product description as a starting point to generate relevant tags and content, some LLMs can now generate tags from the short product descriptions typical of B2B commerce.

- Recommend a basket with must-buy and should-buy items. Using a customer’s purchase history and contract, create one or more recommended baskets with the products and quantities they are likely to need, along with one or two cross-sell recommendations. Give your sales team the same baskets to help them recommend products or take orders on behalf of customers. ML-based order recommendation is mature and can factor in seasonality, business predictions, and external factors in addition to the trendline of past purchases.

- Optimize inventory and procurement with location, customer, and product level demand prediction. Reduce stockouts, reduce excess inventory, reduce wastage of perishables, and reduce shipping times by projecting demand by product by customer for each location.

- Hyper-automate customer support: With the advent of large language models, chatbots now offer a much better interaction experience. However, the bot experience must not be restricted to answering questions from a knowledge base; the bot should help resolve customer requests through automation enabled by integration, AI-based decisioning, and RPA.
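
To make the demand projection by product, customer, and location from the list above more concrete, here is a minimal sketch. It uses a naive moving average rather than the seasonality-aware ML models the text refers to, and the function name `forecast_demand`, the record fields, and the sample orders are all hypothetical.

```python
from collections import defaultdict


def forecast_demand(orders, periods=3):
    """Naive next-period forecast: average the most recent `periods` order
    quantities for each (location, customer, product) combination.

    `orders` is assumed to be sorted oldest to newest.
    """
    history = defaultdict(list)
    for order in orders:
        key = (order["location"], order["customer"], order["product"])
        history[key].append(order["quantity"])
    forecast = {}
    for key, quantities in history.items():
        recent = quantities[-periods:]
        forecast[key] = sum(recent) / len(recent)
    return forecast


# Hypothetical order history for one customer at one location.
orders = [
    {"location": "Austin", "customer": "acme", "product": "gloves", "quantity": 100},
    {"location": "Austin", "customer": "acme", "product": "masks", "quantity": 40},
    {"location": "Austin", "customer": "acme", "product": "gloves", "quantity": 120},
    {"location": "Austin", "customer": "acme", "product": "gloves", "quantity": 110},
    {"location": "Austin", "customer": "acme", "product": "masks", "quantity": 60},
    {"location": "Austin", "customer": "acme", "product": "gloves", "quantity": 130},
]
forecast = forecast_demand(orders)
```

The same per-key forecast could seed a recommended basket: the projected quantity for each product a customer buys regularly becomes the suggested order quantity.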

Introduction

Large Language Models have taken the AI community by storm. Every day, we encounter stories about new releases of Large Language Models (LLMs) of different types, sizes, the problems they solve, and their performance benchmarks on various tasks. The typical scenarios that have been discussed include content generation, summarization, question answering, chatbots, and more.

We believe that LLMs possess much greater Natural Language Processing (NLP) capabilities, and their adaptability to different domains makes them an attractive option to explore a wider range of applications. Among the many NLP tasks they can be employed for, one area that has received less attention is Named Entity Recognition (NER) and Extraction. Entity Extraction has broader applicability in document-intensive workflows, particularly in fields such as Pharmacovigilance, Invoice Processing, Insurance Underwriting, and Contract Management.

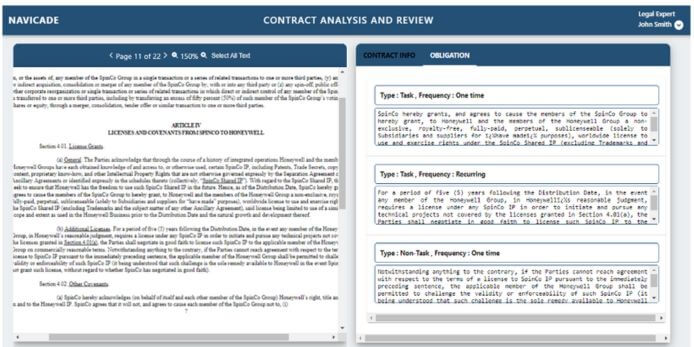

In this blog, we delve into the utilization of Large Language Models in contract analysis, a critical aspect of contract management. We explore the scope of Named Entity Recognition and how contract extraction differs when using LLMs with prompts compared to traditional methods. Furthermore, we introduce NaviCADE, our in-house solution harnessing the power of LLMs to perform advanced contract analysis.

Named Entity Recognition

Named Entity Recognition is an NLP task that identifies entities present in text documents. General entity recognizers perform well in detecting entities such as Person, Organization, Place, Event, etc. However, their usage in specialized domains such as healthcare, contracts, insurance, etc. is limited. This limitation can be circumvented by choosing the right datasets, curating data, customizing models, and deploying them.

Customizing Models

The classical approach to building models involves collecting a corpus of domain-specific data, such as contracts and agreements, manually labeling the corpus, training a model on it with robust hardware infrastructure, and benchmarking the results. While people have found success with this approach by fine-tuning models with spaCy or BERT-based embeddings, the manual labeling effort and training costs involved are high. Moreover, these models cannot detect entities that were not present in the training data. Additionally, the classical approach is ineffective in scenarios with limited or no data.

The emergence of LLMs has brought about a paradigm shift in the way models are conceptualized, trained, and used. A Large Language Model is essentially a few-shot learner and a multi-task learner. Data scientists only need to present a few demonstrations of how entities have been extracted using well-designed prompts. Large language models leverage these samples, perform in-context learning, and generate the desired output. They are remarkably flexible and adaptable to new domains with minimal demonstrations, significantly expanding the applicability of the solution’s extraction capabilities across various contexts. The following section describes a scenario where LLMs were employed.

Contract Extraction Using LLM

Compliance management is a pivotal component of contract management, ensuring that all parties adhere to the terms, conditions, payment schedules, deliveries, and other obligations outlined in the contracts. Efficiently extracting key obligations from documents and storing them in a database is crucial for maximizing value. The current extraction process is a combination of manual and semi-automated methods, yielding mixed results. Improved extraction techniques have been used by NaviCADE to deliver significantly better results.

NaviCADE

NaviCADE is a one-stop solution for extracting data from documents. It is built on cloud services such as AWS to process documents of different types coming from various business functions and industries. NaviCADE has been equipped with LLM capabilities by selecting and fine-tuning the right models for the right purposes. These capabilities have enabled us to approach the extraction task using well-designed prompts comprising instruction, context, and few-shot learning methods. NaviCADE can process different types of contracts, such as Intellectual Property, Master Services Agreement, Marketing Agreement, etc.
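
To make the prompt structure concrete, here is a minimal sketch of assembling an instruction, a few demonstrations, and a new clause into a single few-shot prompt. It is not NaviCADE’s actual prompt or code: the function name `build_extraction_prompt`, the entity labels, and the sample clauses are hypothetical, and the call to a model is deliberately left out.

```python
import json


def build_extraction_prompt(instruction, examples, clause):
    """Assemble a few-shot prompt: instruction, worked demonstrations,
    then the new clause with an open 'Entities:' slot for the model."""
    parts = [instruction.strip(), ""]
    for text, entities in examples:
        parts.append(f"Clause: {text}")
        parts.append(f"Entities: {json.dumps(entities)}")
        parts.append("")
    parts.append(f"Clause: {clause}")
    parts.append("Entities:")
    return "\n".join(parts)


# Hypothetical demonstrations; a real set would come from reviewed contracts.
examples = [
    (
        "Acme Corp shall deliver the software within 30 days of signing.",
        {"party": "Acme Corp", "obligation": "deliver the software",
         "deadline": "30 days of signing"},
    ),
    (
        "The Licensee must pay the annual fee by January 31 each year.",
        {"party": "Licensee", "obligation": "pay the annual fee",
         "deadline": "January 31 each year"},
    ),
]
prompt = build_extraction_prompt(
    "Extract the obligated party, the obligation, and the deadline "
    "from each contract clause. Answer with JSON.",
    examples,
    "The Supplier shall provide monthly status reports by the 5th business day.",
)
print(prompt)
```

Ending the prompt on an open `Entities:` line nudges the model to continue in the same JSON shape as the demonstrations, which keeps the output easy to parse and store.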

A view of the NaviCADE application is attached below, displaying contracts and the extracted obligations from key sections of a document. Additionally, NaviCADE provides insights into the type and frequency of these obligations.

In Conclusion

Large Language Models (LLMs) have ushered in a new era of Named Entity Recognition and Extraction, with applications extending beyond conventional domains. NaviCADE, our innovative solution, showcases the power of LLMs in contract analysis and data extraction, offering a versatile tool for industries reliant on meticulous document processing. With NaviCADE, we embrace the evolving landscape of AI and NLP, envisioning a future where complex documents yield valuable insights effortlessly, revolutionizing compliance, efficiency, and accuracy in diverse sectors.

These are exciting times for the people function. Businesses are facing higher people costs, a greater impact due to the quality of people and leadership skills, talent shortages, and skill evolution. This is the perfect opportunity to become more intelligent and add direct value to the business.

The New G3

Ram Charan, along with a couple of others, wrote an article for HBR about how the new G3 (a triumvirate at the top of the corporation made up of the CEO, CFO, and CHRO) can drive organizational success. Forming such a team is the best way to link financial numbers with the people who produce them. While the CFO drives value by presenting financial data and insights, the CHRO can create similar value by linking various data related to people and providing insights for decision-making across the organization. Company boards are increasingly seeking such insights and trends, leading to the rise of HR analytics teams. Smarter CHROs can derive significant value from people insights.

Interestingly, during my career, I have observed that while most successful organizations prioritize data orientation, many tend to deep dive into data related to marketing and warehousing, but not as much into people data. Often, HR data is treated as a mere line item on the finance SG&A sheet, hidden and overlooked. Without accurate data and insights, HR encounters statements like “I know my people,” which can undermine the function’s credibility. Some organizations excel in sales and marketing analytics but struggle to compile accurate data on their full-time, part-time, and contract workforce.

HR bears the responsibility of managing critical people data. Although technology has evolved, moving from physical file cabinets to the cloud, value does not solely come from tech upgrades.

Democratizing data and insights and making them available to the right stakeholders will empower people to make informed decisions. Leveraging technology to provide data-driven people insights ensures a consistent experience across the organization, leading to more reliable decision-making by managers and employees.

Let me provide examples from two organizations I was part of:

In the first organization, we faced relatively high turnover rates, and the HR business partners lacked data to proactively manage the situation. By implementing systems to capture regular milestone-driven employee feedback and attrition data, HR partners and people managers gained insights and alerts, enabling them to engage and retain key employees effectively.

Another firm successfully connected people and financial data across multiple businesses, analyzing them in context to provide valuable insights. The CHRO suggested leadership and business changes based on these insights.

Other use cases for people insights include:

- Comparing performance metrics (e.g., sales, production units, tech story points) to compensation levels.

- Analyzing manager effectiveness in relation to their operational interactions with employees.

- Personalizing career paths and recommending tailored learning opportunities.

- Creating an internal career marketplace.

- Conducting sentiment analysis and studying people connections (relationship science) within organizations through meeting schedules, internal chats, email chains, etc., to gauge people engagement, employee wellbeing, and leadership traits.

All of this is possible when HR looks beyond pure HR data and incorporates other related work data (e.g., productivity, sales numbers) to generate holistic insights.

From my experience, HR teams excel at finding individual solutions. However, for HR to make a substantial impact, both issues and solutions need to be integrated. The silo approach, unfortunately, is prevalent in HR.

Data has the power to break down these silos. People data’s true potential is realized when different datasets are brought together to answer specific questions, enabling HR teams to generate real value. These insights can then be translated to grassroots decision-making, where people decisions need to be made.

Introduction

In today’s competitive business landscape, small and medium-sized businesses (SMBs) face constant challenges to streamline their operations and maximize profits. One powerful tool that can help SMBs lead cost optimization is a well-thought-out data strategy. Forbes reported that the amount of data created and consumed in the world increased by almost 5000% from 2010 to 2020. According to Gartner, 60 percent of organizations do not measure the costs of poor data quality. A lack of measurement results in reactive responses to data quality issues, missed business growth opportunities, and increased risks. Today, no company can afford to be without a plan for how it uses its data. By leveraging data effectively, SMBs can make informed decisions, identify cost-saving opportunities, and improve overall efficiency. In this blog, we will explore how SMBs can implement a data strategy to drive cost optimization successfully.

Assess Your Data Needs

To begin with, it’s essential to assess the data requirements of your SMB. What kind of data do you need to collect and analyze to make better decisions? Start by identifying key performance indicators (KPIs) that align with your business goals. This could include sales figures, inventory levels, customer feedback, and more. Ensure you have the necessary data collection tools and systems in place to gather this information efficiently.

Centralize Data Storage

Data is often scattered across various platforms and departments within an SMB, making it challenging to access and analyze. Consider centralizing your data storage in a secure and easily accessible location, such as a cloud-based database. This consolidation will help create a single source of truth for your organization, enabling better decision-making and cost analysis. Also, ensure that your technology choices align with your business needs. You can understand your storage requirements by answering a few questions, such as:

- How critical and sensitive is my data?

- How large is my data set?

- How often and quickly do I need to access my data?

- How much can my business afford?

Use Data Analytics Tools

The real power of data lies in its analysis. Invest in user-friendly data analytics tools that suit your budget and business needs. These tools can help you identify patterns, trends, and areas where costs can be optimized. Whether it’s tracking customer behavior, analyzing production efficiency, or monitoring supply chain costs, data analytics can provide valuable insights.

Identify Cost-Saving Opportunities

Once you have collected and analyzed your data, you can start identifying potential cost-saving opportunities. Look for inefficiencies, wasteful spending, or areas where resources are underutilized. For instance, if you notice excess inventory, you can implement better inventory management practices to reduce holding costs. Data-driven insights will allow you to make well-informed decisions and prioritize cost optimization efforts.
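
The excess inventory example can be put into numbers with a small sketch. The function name `excess_holding_cost`, the two months of cover, and the 25% annual holding rate are illustrative assumptions, not figures from this article; substitute your own targets and costs.

```python
def excess_holding_cost(on_hand, avg_monthly_demand, unit_cost,
                        months_of_cover=2, annual_holding_rate=0.25):
    """Estimate the yearly cost of carrying stock above a target level.

    The target is `months_of_cover` months of average demand; anything
    beyond it counts as excess and is charged at `annual_holding_rate`
    (an assumed fraction of unit cost per year).
    """
    target_units = avg_monthly_demand * months_of_cover
    excess_units = max(0, on_hand - target_units)
    return excess_units * unit_cost * annual_holding_rate


# Hypothetical item: 1,000 units on hand, 200 sold per month, 12.00 per unit.
cost = excess_holding_cost(on_hand=1000, avg_monthly_demand=200, unit_cost=12.0)
print(cost)
```

Ranking items by this estimate gives a simple, data-backed order in which to tackle inventory reductions.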

Implement Data-Driven Decision Making

Gone are the days of relying solely on gut feelings and guesswork. Embrace a data-driven decision-making culture within your SMB. Encourage your teams to use data as the basis for their choices. From marketing campaigns to vendor negotiations, let data guide your actions to ensure you are optimizing costs effectively.

Monitor and Measure Progress

Cost optimization is an ongoing process, and your data strategy should reflect that. Continuously monitor and measure the impact of your cost-saving initiatives. Set up regular checkpoints to evaluate the progress and make adjustments as needed. Regular data reviews will help you stay on track and identify new opportunities for improvement.

Ensure Data Security and Compliance

Data security and privacy are paramount, especially when dealing with sensitive information about your business and customers. Implement robust data security measures to safeguard your data from breaches and unauthorized access. Additionally, ensure that your data practices comply with relevant regulations and laws to avoid potential penalties and liabilities.

Conclusion

A well-executed data strategy can be a game-changer for SMBs looking to lead cost optimization. By leveraging data effectively, SMBs can make smarter decisions, identify cost-saving opportunities, and achieve greater efficiency. Remember to start by assessing your data needs, centralize data storage, and invest in data analytics tools. Keep your focus on data-driven decision-making and continuously monitor progress to stay on track. With a solid data strategy in place, your SMB can thrive in a competitive market while optimizing costs for sustained growth and success. If you need any help in your data journey, please feel free to reach out.